Anthropic vs. OpenAI’s photo finish 📸

Plus a brief discussion of evaluation and Wanderly Premium

I recently upgraded Wanderly’s text generation from OpenAI’s GPT-3.5 Turbo large language model (LLM) to GPT-4… but the real news here is how dark horse Anthropic almost beat AI darling OpenAI to power Wanderly’s story engine.

A few months ago, I came across a Reddit thread called “Claude is criminally underrated” and a tweet about how Claude is better at replicating an authentic-sounding “voice”. So I started exploring Anthropic’s suite of models…

Wanderly needs to tell educational, immersive stories

The goal of Wanderly is to tell stories that are so good that a child learns while they are entertained. With so many demos of large language models telling children’s stories, you might think that achieving this goal is easy, but it hasn’t been.

The hardest problem to solve has been getting an LLM to respect reading levels. Children (especially those learning to read independently) need to read at a challenging, but not discouraging level. Because LLMs are mostly trained on documents written for adult audiences, they naturally tend to sound like they are writing for an adult audience. I’ve had to do a lot of prompt engineering to make sure Wanderly’s stories use the right language for children.

For instance, GPT-3.5 loves adverbs… Even when I ask GPT-3.5 for simple text for a kindergartener, it’ll often throw in a “suddenly”, “mysteriously” or “mischievously”. On top of that, text complexity tends to drift higher as the stories get longer. This bothered me, especially for an educational product. I want more consistency for my users.
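One way to catch this kind of drift automatically is to score every page with a readability formula. The sketch below is not how Wanderly does it. It is a minimal Python illustration that assumes the standard Flesch-Kincaid grade-level formula, a crude vowel-group syllable counter, and an equally crude “ends in -ly” adverb check. The function names, the list of non-adverbs, and the tolerance are all hypothetical.

```python
import re

# Hypothetical, incomplete list of common "-ly" words that are not adverbs.
NOT_ADVERBS = {"only", "family", "fly", "lily", "silly", "jelly", "holy", "ugly", "belly"}

def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups and drop a silent trailing 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)

def fk_grade(text: str) -> float:
    """Flesch-Kincaid grade level: a higher number means harder to read."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59

def ly_adverbs(text: str) -> list[str]:
    """Words ending in '-ly', minus a few known non-adverbs (crude)."""
    words = re.findall(r"[A-Za-z]+", text.lower())
    return [w for w in words if w.endswith("ly") and w not in NOT_ADVERBS]

def drifting_pages(pages: list[str], target_grade: float, tolerance: float = 1.0) -> list[int]:
    """Indexes of pages whose estimated grade exceeds the target by more than `tolerance`."""
    return [i for i, page in enumerate(pages) if fk_grade(page) > target_grade + tolerance]

pages = [
    "The cat sat. The dog ran.",
    "Suddenly, the mischievous creature mysteriously disappeared into the extraordinarily enormous labyrinth.",
]
print(drifting_pages(pages, target_grade=1.0))  # → [1]
print(ly_adverbs(pages[1]))
```

Readability formulas are blunt instruments. They measure sentence and word length rather than meaning, so they are better at flagging pages for a human to review than at deciding on their own.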

GPT-3.5 followed my story structure guidance only ~85% of the time. When it didn’t follow my instructions, the plot of each story got a little… weird. Turning down the “temperature” (or “chaos”) parameter can make responses more predictable, but it also makes them less creative.
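For anyone who hasn’t met temperature before: in the usual formulation, the model’s raw next-word scores (logits) are divided by the temperature before a softmax turns them into probabilities. A low temperature sharpens the distribution toward the single most likely word. A high one flattens it, so less likely words get picked more often. This is a generic illustration, not OpenAI’s or Anthropic’s internal implementation, and the three candidate words and their scores are made up.

```python
import math
import random

def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities after dividing by temperature (> 0)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_word(words: list[str], logits: list[float], temperature: float, rng: random.Random) -> str:
    """Draw one word according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(words, weights=probs, k=1)[0]

# Hypothetical candidates for the next word, with made-up scores.
words = ["then", "suddenly", "mischievously"]
logits = [2.0, 1.0, 0.5]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 2) for p in probs])

rng = random.Random(0)  # seeded so the draw is repeatable
print(sample_word(words, logits, temperature=0.7, rng=rng))
```

At a low temperature, “then” wins almost every time. At a high one, “suddenly” and “mischievously” show up much more often. That is the predictability-versus-creativity trade-off in miniature.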

Finally, GPT-3.5 occasionally produced weird artifacts that broke the reader’s immersion in the story. For instance, at the top of a page, I’d get something like “Alanna just decided: ______” instead of the choice being woven seamlessly into the story.
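Artifacts like this are fairly easy to screen for after generation. Here’s a minimal Python sketch. It assumes that a run of three or more underscores, or a line starting with “<name> just decided:”, is a tell-tale sign of template residue. Both patterns are hypothetical and would need tuning against real output. A flagged page could then be regenerated instead of being shown to a reader.

```python
import re

# Hypothetical patterns for template residue leaking into story text.
ARTIFACT_PATTERNS = [
    re.compile(r"_{3,}"),  # unfilled blanks like "______"
    re.compile(r"^\s*\w+ just decided:", re.IGNORECASE | re.MULTILINE),  # the choice echoed as a label
]

def find_artifacts(page: str) -> list[str]:
    """Return every snippet of the page that matches an artifact pattern."""
    return [m.group(0) for pattern in ARTIFACT_PATTERNS for m in pattern.finditer(page)]

page = "Alanna just decided: ______\nShe stepped into the glowing forest."
print(find_artifacts(page))
print(find_artifacts("Alanna decided to follow the glowing path."))
```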

A subjective framework for objective assessment

When I started playing with Claude, it initially just felt better. In retrospect, this was a red flag that my biases were creeping in. But after a couple of encouraging test queries, I decided to start the engineering work to fully integrate Anthropic (partly because, unlike OpenAI, Anthropic has no API playground for trying out prompts).

After getting everything working, the cracks started to show. When you view an LLM’s output at scale, you realize you can’t cherry-pick your favorite responses (like the ones you see all over Twitter). Every response is a dice roll; some are good and some are bad. You have to evaluate responses in aggregate, ideally over a large sample set. I needed objective criteria[1] to choose among the available LLM providers.
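To make “in aggregate” concrete: a pass rate measured on only a handful of samples comes with wide error bars. Below is a minimal Python sketch of the Wilson score interval, a standard way to put a confidence interval around a proportion. The sample counts are hypothetical, chosen only to mirror the ~85% story-structure adherence mentioned earlier.

```python
import math

def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a pass rate of successes / n (z=1.96 gives ~95%)."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2))
    return center - half_width, center + half_width

# Hypothetical evaluation runs, both with an 85% pass rate.
for passed, total in [(17, 20), (170, 200)]:
    lo, hi = wilson_interval(passed, total)
    print(f"{passed}/{total}: {lo:.0%} to {hi:.0%}")
```

With these made-up counts, 17 passes out of 20 gives an interval of roughly 64% to 95%, while 170 out of 200 narrows it to roughly 79% to 89%. Two models that look different on 20 samples can easily be indistinguishable once you collect more.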

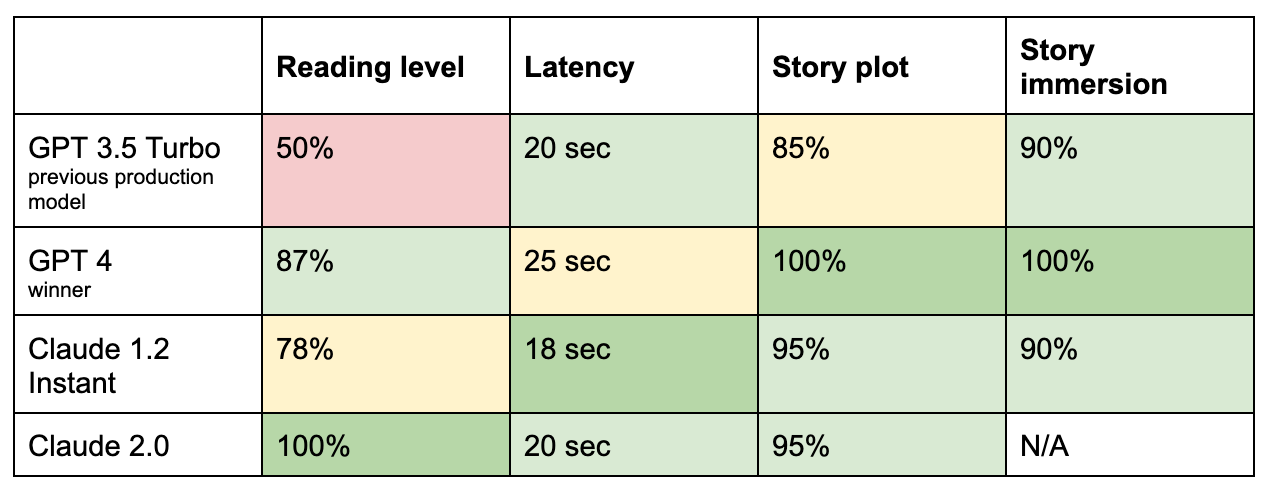

So I came up with a framework. Based on Wanderly’s needs, I decided to evaluate models on four criteria: language-level reliability, latency, story plot adherence, and story immersion.

Here’s how each one performed:

The first thing that might jump out at you is: “Wow, Claude 2.0 was crushing it on a lot of things, but why is there a weird N/A in that last column?” The answer is that Claude 2.0 was doing some amazing work, but the model has a fatal flaw for Wanderly: it reallllly wants to be an assistant, not an API. Every response started with “Here is your ______” or “Sure, I’d be happy to ____.” I was terribly frustrated by this. I tried adding instructions telling Claude 2.0 to drop the preamble, but they changed the prompt so much that reading-level quality degraded[2].
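A different approach, not one this comparison tried, would be to leave the prompt alone and strip the preamble after generation. Here’s a minimal Python sketch. The list of opening phrases is a hypothetical guess, and the approach carries an obvious risk: a story that legitimately begins with “Sure enough…” would lose its first line.

```python
import re

# Hypothetical assistant-style openers; extend to match what the model actually emits.
PREAMBLE = re.compile(
    r"\A\s*(?:Here is|Here's|Here’s|Sure|Certainly|Of course)\b[^\n]*\n+",
    re.IGNORECASE,
)

def strip_preamble(text: str) -> str:
    """Remove one leading assistant-style line, but only if more text follows it."""
    return PREAMBLE.sub("", text, count=1)

raw = "Sure, I'd be happy to write that story!\n\nOnce upon a time, a rabbit lived on the moon."
print(strip_preamble(raw))
```

Because the pattern requires a line break after the opener, a response that consists of nothing but one line is left untouched rather than being emptied.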

Claude 1.2 was actually a huge improvement over GPT-3.5, and so was GPT-4. Claude 1.2 is also cheaper and faster than GPT-4. But two things really put the nail in the coffin for Claude 1.2:

It sometimes added artifacts, like putting the story’s title at the top of the first page.

It hallucinated some science facts[3].

I also found GPT-4 doing some nice, creative things in the story prose while still adhering to my story structure guidance. That was the cherry on top of my final decision to go with GPT-4 for Wanderly’s story engine.

Anthropic’s models are impressive, but Claude is a weak product

So, while I think Anthropic’s models are very close in quality to OpenAI’s for children’s stories, this post would be incomplete without covering the product and business behind the models. Claude’s API felt incomplete and under-resourced:

The developer and support ecosystem is thin and difficult to navigate:

There aren’t many code examples for the Anthropic API online. By contrast, OpenAI has a never-ending forest of YouTube and blog tutorials.

The Anthropic Discord is very quiet. I encountered one Anthropic employee who was extremely helpful, but I didn’t find a community of developers helping each other.

Many infrastructure tools don’t fully support Anthropic (like Helicone, my favorite infrastructure tool; side note: it’s amazing).

Anthropic’s console lacks an API playground and clear communication about payment.

It took 3 emails, 2 weeks, and a ping on LinkedIn before I was able to upgrade my Anthropic API keys to a non-test environment.

Overall, I was surprised by how well Anthropic’s models did given how much less popular they are. The biggest flaws seem like product flaws more than model flaws. I sincerely hope the team at Anthropic will listen to developer feedback and build more flexibility and tooling in the coming months. When they do, I fully intend to revisit this choice, and I’d be delighted if the underdog won. I look forward to Anthropic becoming a formidable competitor that pushes the industry forward.

A side note on scalable evaluation

This whole analysis took way too much time. I’m glad I did it and plan to do it again, but it raises a hot topic in generative AI: evaluation. How do you know if a model is better? How do you know if a prompt is better? How do you know if your fine-tuning is working?[4]

Right now, it seems like a lot of builders are doing exactly what I’m doing: manual inspection. Some tooling systems, like Helicone and Freeplay.ai, are trying to build test suites, user feedback reporting, and A/B testing frameworks. I’ve even heard of folks using LLMs to evaluate LLM responses, which I’m probably going to try next time. But the answer is: No one has figured it out yet. What a time to be building. 😅
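For the LLM-as-judge idea, here’s a minimal Python sketch of what the grading side could look like. It assumes the judge model is asked to return JSON scores against the subjective criteria from the framework earlier in this post (latency is left out because it is measured, not judged). The `complete` argument stands in for whatever function sends a prompt to a model and returns its text. It is hypothetical, and no particular provider SDK is assumed. The rubric wording is made up too.

```python
import json
from typing import Callable

CRITERIA = ("reading_level", "plot_adherence", "immersion")

# Hypothetical rubric; literal JSON braces are doubled so str.format leaves them alone.
RUBRIC = """You are grading one page of a children's story.
Target reading level: {grade}.
Score each criterion from 1 (poor) to 5 (excellent):
- reading_level: vocabulary and sentence length fit the target level
- plot_adherence: the page follows the requested story structure
- immersion: no meta-text, titles, or template artifacts
Reply with JSON only, for example {{"reading_level": 4, "plot_adherence": 5, "immersion": 3}}.

Story page:
{page}
"""

def judge_page(page: str, grade: str, complete: Callable[[str], str]) -> dict[str, int]:
    """Ask a judge model to score a page, then validate the scores it returns."""
    reply = complete(RUBRIC.format(grade=grade, page=page))
    scores = json.loads(reply)
    if not isinstance(scores, dict):
        raise ValueError("judge reply was not a JSON object")
    for key in CRITERIA:
        value = scores.get(key)
        if not isinstance(value, int) or not 1 <= value <= 5:
            raise ValueError(f"bad or missing score for {key!r}: {value!r}")
    return {key: scores[key] for key in CRITERIA}

def fake_judge(prompt: str) -> str:
    """Stand-in for a real model call, so the sketch runs offline."""
    return '{"reading_level": 4, "plot_adherence": 5, "immersion": 3}'

print(judge_page("The moon rabbit hopped home.", "kindergarten", fake_judge))
```

In practice you would average these scores over many pages and spot-check the judge against your own ratings, since judge models can have biases of their own.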

A Wanderly Update

Other than rolling out this major story engine update, I’ve made a couple of small changes:

By popular request, I added an Annual plan. I found this awesome writeup on how to think about annual pricing, but since I don’t know my average customer churn yet, I chose a 25% discount, and we’ll see what happens. 🤷‍♀️

I put multi-child profiles behind the paywall, since they only really make sense once you go beyond the 5 free trial credits, and they were the #1 question I got about Premium plans.

I put an interactive demo using Storylane on the Wanderly marketing page, and I’m tracking to see what impact it might have.
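The arithmetic behind the 25% annual discount is simple. The annual price equals nine months of the monthly price, so, all else being equal, an annual plan brings in more per customer whenever a monthly subscriber would have churned before month nine. That is why churn matters for the choice. Here is a tiny sketch with a hypothetical $10/month price. It works in integer cents to avoid floating-point rounding, and floor division rounds the annual price down to the cent.

```python
def annual_price_cents(monthly_cents: int, discount_pct: int = 25) -> int:
    """Twelve months at the monthly price, minus the discount (rounded down to a cent)."""
    return monthly_cents * 12 * (100 - discount_pct) // 100

def breakeven_months(monthly_cents: int, discount_pct: int = 25) -> float:
    """Months a monthly subscriber must stay to pay as much as one annual payment."""
    return annual_price_cents(monthly_cents, discount_pct) / monthly_cents

monthly = 1000  # hypothetical $10.00/month
print(annual_price_cents(monthly), breakeven_months(monthly))
```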

I also just got access to DALL·E 3 less than 24 hours ago, and it’s even more amazing than I was hoping. I think it’s going to unlock some magical things for Wanderly. Stay tuned for fun!

Info Diet

There’s been some discourse about whether LLM wrapper startups (like Wanderly) will fail or thrive. Two different takes: The Information and a thoughtful tweet.

Anthropic is releasing research about understanding how LLMs “think”

Base models make a difference in the ability to self-improve on a task

Image input for ChatGPT opens up new avenues for prompt injection attacks

If you want to enjoy something completely tangential, here’s an amazing visualization about how Daft Punk sampled another song to create One More Time.

[1] These framework items are subjective, even though I’m using them to be “objective”. So much of going from 0 → 1 is making things concrete. You have to be comfortable not knowing the right metrics, inventing them, and then reinventing them as you learn more.

[2] So, to the LLM product folks out there: if you’re building an API, let folks get completions that don’t always sound like an assistant; the forced assistant voice makes the model way less flexible.

[3] But wow, it was a really cute story about a moon rabbit. 🐰🌕

[4] You might be curious why I’m not fine-tuning a model for Wanderly. A few reasons: 1) I can get pretty far with prompting, 2) it’s expensive, 3) it requires a lot of training data that I don’t have, 4) I personally think it reduces response creativity, and 5) the models are improving so fast that I think my time is best spent iterating with the core models and waiting for the next version to come along.